Monday, March 24, 2025

Why Does Excel Excel?

You've probably mastered this excellent little application called Microsoft Excel.

Excel is extremely popular. So much so that many professionals working in an actuarial role might spend 8 hours a day working in Excel.

The 10,000 hour rule, popularized by Malcolm Gladwell in his book Outliers, postulates that it takes 10,000 hours of practice to master something.

An Actuary will spend ~2,000 hours working per year, minus a few if you have a good deal on PTO. That means that many of you will master Excel after a little over 5 years working in the actuarial profession.

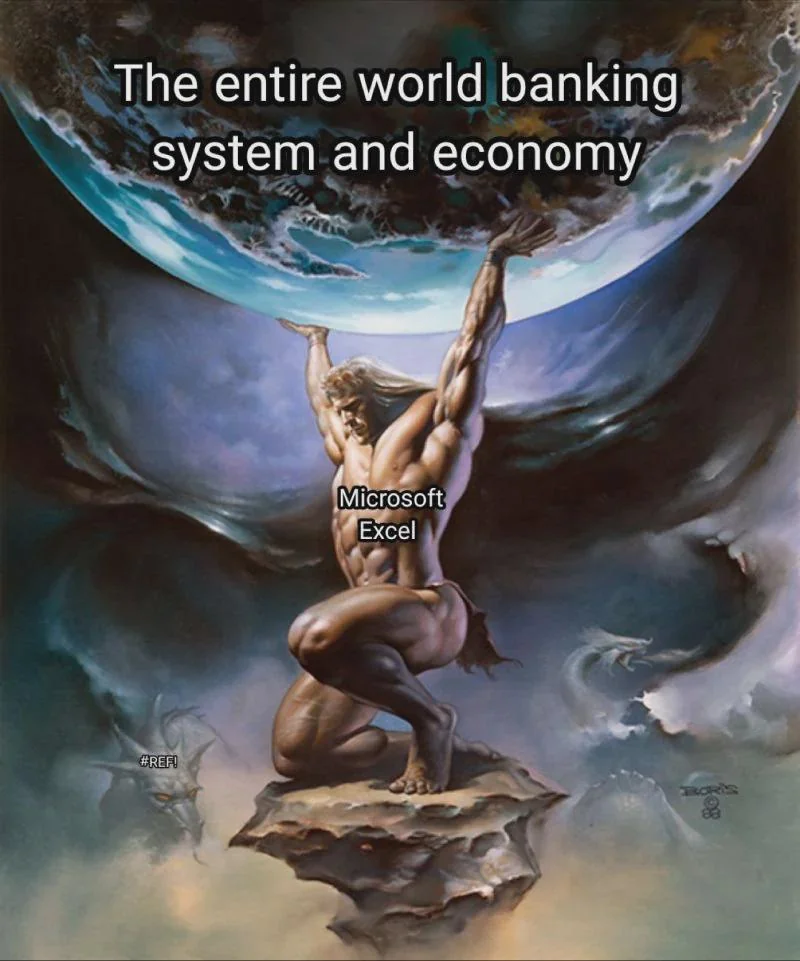

Excel is ubiquidous in banking and finance, leading to excellent memes such as this one:

But why is Excel so popular? To understand that, we need to ask the question: "What is Excel extremely good at?".

Why does Excel excel?

Excel is supreme at one particular thing: Communication.

Excel allows an Actuary to quickly aggregate and summarize small data sets (in the age of big data, 1048576 rows is not very many). The Actuary can then make the results available to technical and non-technical audiences.

The other thing that Excel does well is: Prototyping/Replication.

This is particularly true for actuarial models, which don't often require complicated algorithms or data structures.

Building a model prototype or replicator in Excel is an excellent a fantastic way to invite feedback

because Excel can communicate the underlying logic to colleagues.

These two superpowers are very compelling. But still Excel gets a lot of hate. So we have to ask the opposite question: "What is Excel terrible at?".

Why does Excel repel?

Excel is epicly terrible at one thing: Processing.

Excel just doesn't run as fast as the other kids on the playground. And this is especially true as files become large and unwieldy.

In order to reliably process data, Excel needs to contain it. Otherwise you have external references, which lead to endless nightmares. As an Excel file grows in size, it becomes more and more non-performant. Hopefully you've never had to open a 100MB Excel file. If you have, you know what I'm talking about.

Another thing Excel struggles with: Version Control.

With programming, you have a very powerful tool called git which performs version control. So it's really easy to know what version of your code you're running. With Excel, you have to do that manually. For example, suppose you have an Excel testware file and you need to run it with 5 different mortality tables. How do you know which one is currently in use? By manually tracking it. Not ideal.

One final blemish on Excel's legacy (then I will switch back to positive stuff): Reusing Code.

There's a principle in programming called Don't Repeat Yourself (DRY). Excel has never heard of it. DRY means that you make your code reusable whenever possible so that you don't have to repetitively write the same thing over and over. This has the glorious benefit that whenever you need to change your code, you only have to change it in one place. Excel makes this ridiculously hard. Excel makes this ridiculously hard. Excel makes this ridiculously hard.

Let's take an example. Suppose you have an indexed annuity with funds allocated to 5 different strategies. You need to project fund value for each fund separately. The sane programmer will approach this by creating reusable code that governs the projection of a fund, then caling the same code for each fund, but with 5 different configurations.

In Excel, you're gonna do it by making a tab for each fund (or you could make one tab with 5x the columns, but that's even worse). And each tab needs its own set of formulas. And whenever you need to change the fund projection logic, you need to do it in 5 places. And nothing guarantees that you won't make a manual error in doing so. Good luck.

Of course, you could work around this limitation by using a macro with some fancy Evaluate calls. I didn't say it was impossible, just extremely difficult.

So What?

So now, in the context of actuarial modeling, how can we make use of the things Excel excels at while avoiding its pitfalls?

There are two common approaches that actuarial modeling systems take to using Excel.

1. Use Excel as the actual system

Here, we are getting all the downsides. We are just going to implement our entire actuarial model in Excel. And use it for everything. A wonderful thought. And this does give us a lot of transparency.

But the problems are, you need a lot of Processing, you have to have good Version Control, and you require Code Reuse. You're just going to end up paying 7 figures of salary to a team to get endless headaches in return.

2. Treat Excel as an afterthought

Now let's ignore all of Excel's upsides. We are just going to spit out hardcoded values from our "real" actuarial modeling system into Excel. This is probably a bit better, but we lose the transparency.

If someone wants to see your calculation logic, either they can't (because you're using a black box) or they must learn a new technology. You will have a much more difficult time with Communication and Replication.

Xval's approach

Xval gets the benefits of Excel without the pitfalls by automatically creating Excel audit files with formulas populated for any model calculations. This powerful audit feature is built into xact, the domain-specific programming language that powers Xval.

Xval is different from other systems because we believe that Excel is an important tool for actuaries, but it shouldn't be used to manage models. Instead, we use Excel as a power communication tool to make Xval maximally transparent.

Next Steps

If you want to learn more, you can checkout out a blog post and associated YouTube video demoing Xval's Excel audit functionality.

You can try Xval for yourself at demo.xval.io. If you want to reach out to learn more, you can find me on Linkedin or email me a timothy dot quast @xval.io.